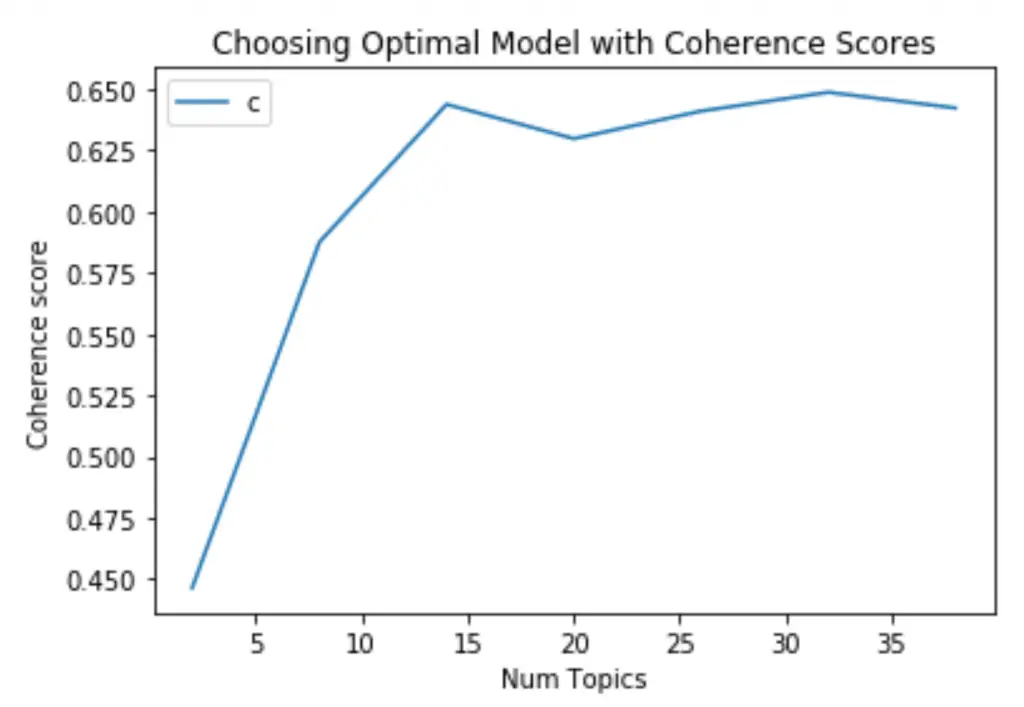

Latent Dirichlet Allocation (LDA) is a widely used, unsupervised topic modeling technique for extracting topics from text. Topic models learn topics, typically represented as sets of important words, automatically from unlabelled documents. In LDA, each topic is represented as a distribution over words, and each document as a mixture of those topics.

Topic coherence is often defined as the average or median of the pairwise word-similarity scores of the words in a topic, e.g. Pointwise Mutual Information (PMI).

**Metrics and scoring: quantifying the quality of predictions.** There are 3 different APIs for evaluating the quality of a model's predictions. One of them is the estimator score method: estimators have a `score` method providing a default evaluation criterion for the problem they are designed to solve. This is not discussed on this page, but in each estimator's documentation.

For clustering, `silhouette_score` takes the following parameters:

- `X`: array of shape (n_samples_a, n_samples_a) if metric == "precomputed", or (n_samples_a, n_features) otherwise. An array of pairwise distances between samples, or a feature array.
- `metric`: str or callable, default='euclidean'. The metric to use when calculating distance between instances in a feature array. If metric is a string, it must be one of the options allowed by `pairwise_distances`. If `X` is the distance array itself, use `metric="precomputed"`.
- `sample_size`: int, default=None. The size of the sample to use when computing the Silhouette Coefficient on a random subset of the data. If `sample_size` is None, no sampling is used.
- `random_state`: int, RandomState instance or None, default=None. Determines random number generation for selecting a subset of samples. Pass an int for reproducible results across multiple function calls.
- `**kwds`: optional keyword parameters. Any further parameters are passed directly to the distance function. If a `scipy.spatial.distance` metric is used, the parameters are still metric dependent.
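As a rough illustration of the `silhouette_score` parameters described here, the following sketch scores a tiny, made-up two-cluster dataset. The data and variable names are assumptions chosen for illustration; only `silhouette_score` and `pairwise_distances` come from scikit-learn.

```python
import numpy as np
from sklearn.metrics import pairwise_distances, silhouette_score

# Hypothetical toy data: two well-separated groups of three points each.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])
labels = np.array([0, 0, 0, 1, 1, 1])

# Feature array plus a named metric.
score_features = silhouette_score(X, labels, metric="euclidean")

# The same score from a precomputed (n_samples, n_samples) distance matrix.
D = pairwise_distances(X, metric="euclidean")
score_precomputed = silhouette_score(D, labels, metric="precomputed")

# Score on a random subset of 4 samples; an int random_state makes the
# subset (and therefore the result) repeatable across calls.
score_sampled_a = silhouette_score(X, labels, sample_size=4, random_state=0)
score_sampled_b = silhouette_score(X, labels, sample_size=4, random_state=0)

print(score_features, score_precomputed, score_sampled_a)
```

Subsampling trades accuracy for speed, so it is mainly useful on datasets where the full pairwise distance computation is too expensive.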
`silhouette_score` returns the mean Silhouette Coefficient over all samples. To obtain the values for each sample, use `silhouette_samples`. The best value is 1 and the worst value is -1. Negative values generally indicate that a sample has been assigned to the wrong cluster, as a different cluster is more similar. Note that the Silhouette Coefficient is only defined if the number of labels is 2 <= n_labels <= n_samples - 1.

Hence, although we can calculate aggregate coherence scores for a topic model, there is no way of knowing the degree of confidence in the metric.

- Review topics distribution across documents
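To make the idea of an aggregate coherence score concrete, here is a minimal sketch of coherence as the average pairwise PMI of a topic's top words, estimated from document co-occurrence. The function name `pmi_coherence`, the smoothing constant `eps`, and the toy corpus are all assumptions for illustration; this is not scikit-learn's API, which does not provide a coherence metric.

```python
import math
from itertools import combinations


def pmi_coherence(top_words, documents, eps=1e-12):
    """Average pairwise PMI of a topic's top words (document co-occurrence)."""
    doc_sets = [set(doc) for doc in documents]
    n_docs = len(doc_sets)

    def doc_prob(*words):
        # Fraction of documents that contain every given word.
        return sum(1 for d in doc_sets if all(w in d for w in words)) / n_docs

    scores = []
    for w1, w2 in combinations(top_words, 2):
        joint = doc_prob(w1, w2)
        independent = doc_prob(w1) * doc_prob(w2)
        # eps avoids log(0) and division by zero for unseen words or pairs.
        scores.append(math.log((joint + eps) / (independent + eps)))
    return sum(scores) / len(scores)


# Hypothetical tokenized corpus and two hand-written "topics".
docs = [["cat", "dog", "pet"], ["cat", "dog"],
        ["stock", "market"], ["stock", "market", "trade"]]
coherent = pmi_coherence(["cat", "dog"], docs)
incoherent = pmi_coherence(["cat", "stock"], docs)

# Aggregate score for the "model": the mean over its topics.
aggregate = (coherent + incoherent) / 2
print(coherent, incoherent, aggregate)
```

The aggregate is a single number with no accompanying variance or interval, which is why it says little about how much confidence to place in it.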

# Sklearn LDA coherence score: how to

- Diagnose model performance with perplexity and log-likelihood
- How to see the best topic model and its parameters
- How to see the dominant topic in each document

`silhouette_score(X, labels, *, metric='euclidean', sample_size=None, random_state=None, **kwds)`

Compute the mean Silhouette Coefficient of all samples.

The Silhouette Coefficient is calculated using the mean intra-cluster distance (`a`) and the mean nearest-cluster distance (`b`) for each sample. The Silhouette Coefficient for a sample is `(b - a) / max(a, b)`. To clarify, `b` is the distance between a sample and the nearest cluster that the sample is not a part of.
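The formula `(b - a) / max(a, b)` can be worked through by hand. The sketch below is a plain-Python illustration of that definition, not scikit-learn's implementation; the helper name `silhouette_per_sample`, the toy points, and the choice to score single-member clusters as 0 are assumptions.

```python
import math


def silhouette_per_sample(points, labels):
    """Per-sample (b - a) / max(a, b) with Euclidean distance."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    # The coefficient needs at least 2 clusters and fewer clusters than samples.
    if not 2 <= len(clusters) <= len(points) - 1:
        raise ValueError("need 2 <= n_labels <= n_samples - 1")

    def mean_dist(i, members):
        return sum(math.dist(points[i], points[j]) for j in members) / len(members)

    scores = []
    for i, lab in enumerate(labels):
        own = [j for j in clusters[lab] if j != i]
        if not own:
            scores.append(0.0)  # assumption: a single-member cluster scores 0
            continue
        a = mean_dist(i, own)  # mean intra-cluster distance
        # mean distance to the nearest cluster the sample does not belong to
        b = min(mean_dist(i, m) for other, m in clusters.items() if other != lab)
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return scores


# Hypothetical toy data: two tight groups, far apart.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
good = silhouette_per_sample(points, [0, 0, 0, 1, 1, 1])
# Same points, but (1, 0) is deliberately assigned to the far cluster.
bad = silhouette_per_sample(points, [0, 0, 1, 1, 1, 1])

print(sum(good) / len(good))  # mean coefficient for the sensible labelling
print(bad[2])                 # negative: the sample sits closer to the other cluster
```

In the second labelling the mislabelled point has a large `a` and a small `b`, which is exactly the case where the coefficient turns negative.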
